Sheila, Camilla. Sheila and Vicky moved the AOM and realigned in 80830.

Summary: Today we measured the powers on SQZT0, see attached photo. We aimed to understand where we were losing our green light, as we had to turn down the OPO setpoint last week (82787). Adjusting the SHG EOM, AOM and fiber pointing dramatically increased the available green light. The OPO setpoint is back to 80uW with plenty of extra green available.

After taking the attached power measurements we could see the SHG conversion was fine: 130mW green out of the SHG for 360mW of IR in.

We were losing a lot of power through the EOM: 96mW in, 76mW out (expect ~100%). We could see the beam clipping in yaw by taking a photo. Sheila adjusted the EOM alignment, increasing the power out to 95mW.
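As a quick check of the numbers above, here is a minimal sketch of the throughput arithmetic. The helper name `throughput` is hypothetical, and it assumes the EOM input power was still 96mW when the 95mW output was measured after realignment.

```python
def throughput(p_in_mw, p_out_mw):
    """Return output power as a fraction of input power (both in mW)."""
    if p_in_mw <= 0:
        raise ValueError("input power must be positive")
    return p_out_mw / p_in_mw

# SHG conversion: 360mW IR in, 130mW green out
shg_conversion = throughput(360.0, 130.0)

# EOM throughput before and after the alignment fix
# (assumption: input stayed at 96mW for the "after" measurement)
eom_before = throughput(96.0, 76.0)
eom_after = throughput(96.0, 95.0)

print(f"SHG conversion: {shg_conversion:.1%}")  # 36.1%
print(f"EOM before:     {eom_before:.1%}")      # 79.2%
print(f"EOM after:      {eom_after:.1%}")       # 99.0%
```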

Then we aligned the AOM and fiber following 72081:

1) Set the ISS drive point at 0V to make only the 0th order beam and check for ~90% AOM throughput with a power meter. Started with 95mW in, 50mW out. Improved to 95mW in, 80mW out = 84%.

2) Set the ISS drive point at 5V and align the AOM to maximize the 1st order beam (which is to the left of the 0th order beam looking from the laser side of SQZT0). After the AOM alignment, the 1st order beam was 27.5 mW and the 0th order beam was 49 mW. We measured the AOM throughput again, including both the 0th and 1st order beams. The AOM throughput was 77.5mW/93mW = 83%.

3) Set the ISS drive point at 5V and align the fiber by maximizing H1:SQZ-OPO_REFL_DC_POWER. I needed to adjust the SHG waveplate to reduce the power to stop the PD saturating.
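The AOM numbers in steps 1 and 2 can be checked with a short sketch like the one below. The helper name `fraction` is hypothetical. The total throughput uses the 77.5mW and 93mW figures exactly as quoted in step 2; note that the two individually quoted orders (27.5mW and 49mW) sum to 76.5mW, so the first-order share is computed from those two readings separately.

```python
def fraction(part_mw, whole_mw):
    """Return part/whole for two power readings in mW."""
    if whole_mw <= 0:
        raise ValueError("reference power must be positive")
    return part_mw / whole_mw

# Step 1: 0V drive, 0th order only, 95mW in
aom_start = fraction(50.0, 95.0)
aom_improved = fraction(80.0, 95.0)

# Step 2: 5V drive, individual order readings as quoted
first_order_mw = 27.5
zeroth_order_mw = 49.0
first_order_share = fraction(first_order_mw, first_order_mw + zeroth_order_mw)

# Step 2: total throughput using the quoted 77.5mW out of 93mW
aom_total_quoted = fraction(77.5, 93.0)

print(f"0th order throughput, start:    {aom_start:.1%}")         # 52.6%
print(f"0th order throughput, improved: {aom_improved:.1%}")      # 84.2%
print(f"1st order share of AOM output:  {first_order_share:.1%}") # 35.9%
print(f"Total throughput (as quoted):   {aom_total_quoted:.1%}")  # 83.3%
```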

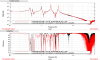

After this alignment, the SHG output is 42.7 mW, the pump going to fiber is 20.5 mW, and the rejected power is 2.7 mW. The ISS can be locked with OPO trans of 80uW while the ISS control monitor is 7.0. Also checked the OPO temperature.

Note that the power does change depending on the SHG PZT voltage. This may be caused by an alignment change from the PZT.

As an ISS control monitor of 7.0 is a little high with 26.5mW SHG launched (OPO unlocked), I further turned the SHG launch power down to 20mW and adjusted the HAM7 rejected light. The ISS control monitor was left at 5.4, which is closer to the middle of the 0-10V range.