SQZ Morning Troubles

After reading Tony's alog, I suspected that low SHG power was causing the lock issues. I trended Sheila's SHG power trend while adjusting the SHG temperature to maximize it. This got SQZ to lock almost immediately (screenshot below).
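The manual procedure above (nudge the SHG temperature, watch the power, keep going while it rises) is essentially a one-dimensional hill climb. Below is a minimal Python sketch of that idea. It assumes hypothetical `read_power` and `set_temp` callables that stand in for whatever readback and setpoint access is actually used; no real channel names or guardian code are implied. It tries both directions because the optimum was not on the same side as the day before.

```python
from typing import Callable, Tuple


def maximize_by_temperature(
    read_power: Callable[[], float],    # hypothetical: returns current SHG power
    set_temp: Callable[[float], None],  # hypothetical: writes SHG temperature setpoint
    start_temp: float,
    step: float = 0.01,
    max_steps: int = 200,
) -> Tuple[float, float]:
    """Step the temperature setpoint and keep moving while the power rises.

    Tries increasing temperature first; if the very first step does not
    improve the power, tries decreasing instead. Returns (best_temp, best_power)
    and leaves the setpoint at best_temp.
    """
    set_temp(start_temp)
    best_temp, best_power = start_temp, read_power()
    for direction in (+1.0, -1.0):
        temp = best_temp
        improved = False
        for _ in range(max_steps):
            temp += direction * step
            set_temp(temp)
            power = read_power()
            if power <= best_power:
                break  # power stopped rising in this direction
            best_temp, best_power = temp, power
            improved = True
        if improved:
            break  # found the uphill direction, no need to try the other one
    set_temp(best_temp)  # restore the best setpoint found
    return best_temp, best_power
```

A simple climb like this stops at the first drop in power, so with a power curve that ends in a sharp jump, a coarse step size could step over the peak; a finer step near the top would be the safer choice.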

However, although SQZ locked, it looked like SQZ_ANG_ASC had gone awry during the hours of locking attempts and, as the wiki puts it, had "run away". I requested the reset SQZ_ANG guardian state, but this didn't work. Then I saw another SQZ angle reset state, called "Reset_SQZ_ANG_FDS", which immediately broke guardian: the node went into error and a number of ZM4 and ZM5 settings were changed. I reloaded the SQZ guardian and successfully got back to FREQ_DEP_SQZ. I saw that some SDF changes had occurred, so I reverted them, and guardian complained less and less.

Then there were 2 TCS SDF OFFSETs, the ZM4/5 M2 SAMS OFFSETs, that were off by 100 V, sitting at their maximum of 200 V. I recalled that when SQZ dropped out, it would show a notification along the lines of "is this thing on?". Because this slider was at its max, I assumed all I needed to do was revert this change, which had happened over 3 hrs earlier. I did that, and we lost lock immediately.

What I think happened in order:

- We get to NLN, but the SQZ FC can't lock due to low SHG power. It had degraded from the set point of 2.2 that Sheila and I established down to 1.45.

- Guardian maxes out its ability to attempt locking using the ZMs and SQZ ANG ASC (if those aren't the same thing) and sits there with the FC unlocked, unable to move any further.

- Tony gets called, realizes the issue, and can only get to a certain level before LOCK_LO_FDS rails, which I assume is due to the above change. This means that even if we readjust the power, the ZM sliders would also have to be adjusted.

- I get to site and change the temperature to maximize SHG power, and SQZ locks, but in a strange state where ZM4 and ZM5 have saturated M2 SAMS OFFSETs. I readjust them, but the slider change is so abrupt that it causes a lockloss.

Now I'm relocking, having reverted the M2 SAMS OFFSETs that guardian changed and having maximized the SHG power via the SHG temperature (which, interestingly, had to go down, contrary to yesterday). I've also attached a screenshot of the SDF change related to AWC-SM4_M2_SAMS_OFFSET because I'm about to revert that change on the assumption that it is erroneous.

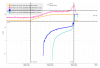

What I find interesting is that lowering the SHG temperature increases the SHG power first linearly, then, past some threshold, exponentially, but only for one big final jump (kind of like a temperature resonance, if you can excuse my science fiction). You can see this in the SHG DC POWERMON trend, which shows a huge final jump.