Mayank, Sheila, Jennie W, Ryan S, Elenna, Jenne, Camilla, Robert.

Follow-on from 82401; we mostly copied Jenne's procedure from 77968.

As soon as we started setting up, the IFO lost lock due to an earthquake, so we decided to do this with the IFO unlocked.

Ryan locked the green arms in initial alignment and offloaded them. We then took ISC_LOCK to PR2_SPOT_MOVE (in this state, moving PR3 automatically calculates and applies the corresponding moves to PR2, PRM and IM4).

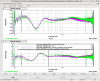

The green beatnotes were low but improved once we started moving. Steps taken:

1. Moved PR3 yaw with the sliders until the ALS_C_COMM_A beatnote decreased to ~-14, then used pico A_8_X to bring it back.
2. Repeated until PR3 M1 pitch was 1-2 urad off, then Mayank brought the pitch back with the PR3 sliders.
3. Repeated the PR3 yaw slider and pico moves.

We started with PR3 (pitch, yaw) at (-122, 96) and went to (-125.9, 39.5), aiming for yaw at -34. However, at (-124, 68) we lost the beam on AS_AIR, whoops.

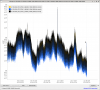

Once we realized that we had fallen off AS_AIR, we took PR3 back to the last position where we had light on it (68 urad on the yaw slider). We ignored the green arms, planning to move back to 38 urad while moving SR2 to keep light on AS_AIR. We moved SR2 in single bounce (ITMY misaligned) to increase the light on AS_AIR. We couldn't go any further in PR3 yaw while keeping light on AS_AIR, so we decided to revert the green picos to work with 68 urad on PR3. We took PR3 back to (-125.9, 39.5) and reversed our sequence of slider and pico moves.

After we got here, Ryan offloaded the green arms and we tried to go through initial alignment. There were no flashes in initial alignment in either the X arm or the Y arm (we touched the BS for the Y arm). Usually we would be able to improve the alignment while watching AS_AIR, but the beam wasn't clearly on AS_AIR. We improved the beam on AS_AIR by moving SR2 and SR3.

We ran SR2 align in the ALIGN_IFO guardian. This seemed to make some clipping worse; are we clipping on SR2? We are still working on the SR2 alignment. Maybe we should update the ISC_LOCK PR2_SPOT_MOVE state to have SR2 and SR3 follow along (as PR2, PRM and IM4 already do) so that we don't lose AS_C and AS_AIR.