J. Kissel, E. Goetz, L. Dartez

As mentioned briefly in LHO:82329 -- after discovering that there is a significant amount of aliasing from the 524 kHz version of the OMC DCPD signals when down-sampled to 16 kHz -- Louis and Evan tried a version of the (test, pick-off, A1, A2, B1, and B2) DCPD signal path with two copies each of the existing 524 kHz to 65 kHz and 65 kHz to 16 kHz AA filters, as opposed to one. In this aLOG, I'll refer to these filters as "Dec65k" and "Dec16k," or, for short in the attached plots, "65k" and "16k."

Just restating the conclusion from LHO:82329 :: Having two copies of these filters -- and thus a factor of 10x more suppression in the 8 to 32 kHz region and 100x more suppression in the 32 to 232 kHz region -- seems to dramatically reduce the amount of aliasing.
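As a reminder of why out-of-band suppression matters here, the sketch below computes where a spectral feature lands after down-sampling. It assumes the "16 kHz" rate is exactly 2^14 = 16384 Hz, and the helper name `alias_frequency` is purely illustrative; it is not taken from any front-end code.

```python
def alias_frequency(f_hz, fs_hz):
    """Apparent frequency of a tone at f_hz after sampling at fs_hz."""
    folded = f_hz % fs_hz  # fold into one sample-rate span, [0, fs)
    # Anything above Nyquist (fs/2) mirrors back down into [0, fs/2].
    return fs_hz - folded if folded > fs_hz / 2 else folded

FS_16K = 2**14  # assumed exact rate of the "16 kHz" channel, in Hz

# Example tones inside the 8 to 32 kHz region discussed above.
for f in (10000.0, 20000.0, 30000.0):
    print(f"{f:8.0f} Hz aliases to {alias_frequency(f, FS_16K):6.0f} Hz")
```

Any content the AA filters leave behind above 8192 Hz folds into the 0 to 8192 Hz band in this way, which is why extra suppression in the 8 to 32 kHz region shows up directly as reduced aliasing.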

Recall these filters were designed with lots of compromises in mind -- see all the details in G2202011.

Upon discussion of applying this "why don't we just add MOAR FIRE" option of 2xDec65k and 2xDec16k to the primary signal path, there were concerns about

- DARM open loop gain phase margin, and

- Computational turn-around time for the h1iopomc0 front-end process.

I attach two plots to help facilitate that discussion:

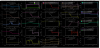

(1st attachment) Bode plot of various combinations of the Dec65k and Dec16k filters.

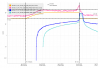

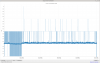

(2nd attachment) Plot of the CPU timing meter over the weekend, the period during which these filters were installed and ON in the 4x test banks on the same computer.

For (1st) :: Here we show the high-frequency suppression above 1000 Hz, and the phase loss around 100 Hz, for several simple combinations of filtering. The weekend configuration of two copies of the 65k and 16k filters is shown in BLACK; the nominal configuration of one copy is shown in RED. In short -- all these combinations incur less than 5 deg phase loss around the DARM UGF. Louis is going to do some modeling to show the impact of these combinations on the DARM loop stability via plots of open loop gain and loop suppression. We anecdotally remember that the phase margin is "pretty tight," sub-30 [deg], but we'll wait for the plots.
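The trade-off in the Bode plot follows from a simple fact: cascading N identical filters multiplies their magnitudes (so the suppression in dB scales by N) and adds their phases (so the phase loss at low frequency also scales by N). A minimal sketch of that relationship is below. The filter used is a stand-in 2nd-order Butterworth low-pass with a made-up 7 kHz corner, NOT the actual Dec65k or Dec16k design, so the printed numbers are illustrative only.

```python
import cmath
import math

def lowpass_response(f_hz, corner_hz=7000.0):
    """Stand-in 2nd-order Butterworth low-pass (hypothetical, not Dec65k/Dec16k)."""
    s = 1j * f_hz / corner_hz  # normalized complex frequency
    return 1.0 / (s * s + math.sqrt(2.0) * s + 1.0)

def cascade(f_hz, n_copies):
    """Response of n_copies identical filters in series."""
    return lowpass_response(f_hz) ** n_copies

def mag_db(h):
    return 20.0 * math.log10(abs(h))

def phase_deg(h):
    return math.degrees(cmath.phase(h))

for n in (1, 2):
    print(f"{n} copy/copies: "
          f"phase at 100 Hz = {phase_deg(cascade(100.0, n)):.2f} deg, "
          f"gain at 20 kHz = {mag_db(cascade(20000.0, n)):.1f} dB")
```

With the real filter coefficients substituted in, the same doubling applies: whatever phase one copy costs near the DARM UGF, two copies cost twice that.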

For (2nd) :: With the weekend configuration of filters, with eight more filters installed and running (one extra copy each of the 65k and 16k, in each of the four A1, A2, B1, B2 banks), the extremes of CPU clock cycle turnaround time did increase, from "never above 13 [usec]" to "occasionally hitting 14 [usec]" out of the ideal 1/2^16 [sec] = 15.26 [usec], which is rounded up on the GDSTP MEDM screen to an even 16 [usec]. This is to say that "we can probably run with 4 more filters in the A0 and B0 banks," though that may necessarily limit how much filtering can be in the A1, A2, B1, B2 banks for future testing. Also, no one has really looked at what happens to the gravitational wave channel when the timing of the CPU changes, or gets near the ideal clock-cycle time, namely the basic question "Are there glitches in the GW data when the CPU runs longer than normal?"
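The back-of-the-envelope arithmetic behind "we can probably run with 4 more filters" can be sketched as follows. The linear per-filter cost is a crude assumption of this sketch (it treats the roughly 1 [usec] rise in the observed maximum as shared equally among the eight added filters), and all variable names are illustrative.

```python
# Ideal cycle time from the text: 1/2^16 seconds, expressed in microseconds.
CYCLE_RATE_HZ = 2**16
budget_us = 1e6 / CYCLE_RATE_HZ

# Observed extremes of the CPU meter, as quoted above.
max_before_us = 13.0   # "never above 13 [usec]"
max_after_us = 14.0    # "occasionally hitting 14 [usec]"
filters_added = 8      # extra 65k and 16k copies in A1, A2, B1, B2
filters_proposed = 4   # extra copies proposed for the A0 and B0 banks

# ASSUMPTION: CPU cost scales linearly with the number of filters.
cost_per_filter_us = (max_after_us - max_before_us) / filters_added
projected_max_us = max_after_us + filters_proposed * cost_per_filter_us
headroom_us = budget_us - projected_max_us

print(f"cycle budget      : {budget_us:.2f} usec")
print(f"cost per filter   : {cost_per_filter_us:.3f} usec (linear guess)")
print(f"projected maximum : {projected_max_us:.2f} usec")
print(f"remaining headroom: {headroom_us:.2f} usec")
```

Under that assumption the projected maximum stays under the cycle budget, but with well under 1 [usec] of headroom, which is consistent with the caution above about limiting further filtering in the test banks.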

I dropped Observing from 00:49 to 00:56 to adjust the SQZer. I brought H1:SQZ-ADF_OMC_TRANS_PHASE back to -136 (alog82421), and then, after the servo was done, I adjusted the OPO temperature. I accepted the new phase in SDF.