Latest Calibration:

gpstime;python /ligo/groups/cal/src/simulines/simulines/simuLines.py -i /ligo/groups/cal/H1/simulines_settings/newDARM_20231221/settings_h1_newDARM_scaled_by_drivealign_20231221_factor_p1.ini;gpstime

notification: end of measurement

notification: end of test

diag> save /ligo/groups/cal/H1/measurements/PCALY2DARM_BB/PCALY2DARM_BB_20250116T163130Z.xml

/ligo/groups/cal/H1/measurements/PCALY2DARM_BB/PCALY2DARM_BB_20250116T163130Z.xml saved

diag> quit

EXIT KERNEL

2025-01-16 08:36:40,405 bb measurement complete.

2025-01-16 08:36:40,405 bb output: /ligo/groups/cal/H1/measurements/PCALY2DARM_BB/PCALY2DARM_BB_20250116T163130Z.xml

2025-01-16 08:36:40,405 all measurements complete.

gpstime;python /ligo/groups/cal/src/simulines/simulines/simuLines.py -i /ligo/groups/cal/H1/simulines_settings/newDARM_20231221/settings_h1_newDARM_scaled_by_drivealign_20231221_factor_p1.ini;gpstime

PST: 2025-01-16 08:40:33.517638 PST

UTC: 2025-01-16 16:40:33.517638 UTC

GPS: 1421080851.517638

2025-01-16 17:03:33,281 | INFO | 0 still running.

2025-01-16 17:03:33,281 | INFO | gathering data for a few more seconds

2025-01-16 17:03:39,283 | INFO | Finished gathering data. Data ends at 1421082236.0

2025-01-16 17:03:39,501 | INFO | It is SAFE TO RETURN TO OBSERVING now, whilst data is processed.

2025-01-16 17:03:39,501 | INFO | Commencing data processing.

2025-01-16 17:03:39,501 | INFO | Ending lockloss monitor. This is either due to having completed the measurement, and this functionality being terminated; or because the whole process was aborted.

2025-01-16 17:04:16,833 | INFO | File written out to: /ligo/groups/cal/H1/measurements/DARMOLG_SS/DARMOLG_SS_20250116T164034Z.hdf5

2025-01-16 17:04:16,840 | INFO | File written out to: /ligo/groups/cal/H1/measurements/PCALY2DARM_SS/PCALY2DARM_SS_20250116T164034Z.hdf5

2025-01-16 17:04:16,845 | INFO | File written out to: /ligo/groups/cal/H1/measurements/SUSETMX_L1_SS/SUSETMX_L1_SS_20250116T164034Z.hdf5

2025-01-16 17:04:16,850 | INFO | File written out to: /ligo/groups/cal/H1/measurements/SUSETMX_L2_SS/SUSETMX_L2_SS_20250116T164034Z.hdf5

2025-01-16 17:04:16,854 | INFO | File written out to: /ligo/groups/cal/H1/measurements/SUSETMX_L3_SS/SUSETMX_L3_SS_20250116T164034Z.hdf5

ICE default IO error handler doing an exit(), pid = 1289567, errno = 32

PST: 2025-01-16 09:04:16.931501 PST

UTC: 2025-01-16 17:04:16.931501 UTC

GPS: 1421082274.931501
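For a quick sanity check of the run timing, the GPS stamps printed by gpstime before and after simuLines.py, together with the "Data ends at" line, give the data-taking and processing durations. A minimal arithmetic sketch (the constant and function names are illustrative, not part of simulines):

```python
# GPS stamps copied from the simulines log above.
START_GPS = 1421080851.517638   # gpstime printed before simuLines.py started
DATA_END_GPS = 1421082236.0     # "Finished gathering data. Data ends at ..."
FINISH_GPS = 1421082274.931501  # gpstime printed after simuLines.py exited


def run_durations(start_gps, data_end_gps, finish_gps):
    """Split a measurement run into data-taking and post-processing time (s).

    The data-taking span starts at the first gpstime call, so it also
    includes the script's start-up time before injections began.
    """
    return {
        "data_taking_s": data_end_gps - start_gps,
        "processing_s": finish_gps - data_end_gps,
        "total_s": finish_gps - start_gps,
    }


if __name__ == "__main__":
    for name, seconds in run_durations(START_GPS, DATA_END_GPS, FINISH_GPS).items():
        print(f"{name}: {seconds:8.1f} s ({seconds / 60:.1f} min)")
```

With these stamps this comes out to roughly 23 minutes of data taking and under a minute of processing, consistent with the "SAFE TO RETURN TO OBSERVING" line at 17:03:39 UTC.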

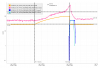

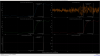

In the last two lock acquisitions, we've had the fast DARM and ETMY L2 glitch 10 seconds after DARM1 FM1 was turned off. Plots attached from Jan 26th (and zoom) and Jan 27th (and zoom). We expect this means the fast glitch comes from FM1 turning off, but we've seen this glitch come and go in the past, e.g. 81638, where we thought we had fixed the glitch by never turning on DARM_FM1; in fact we were still turning FM1 on, just later in the lock sequence.
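One way to test whether the fast glitch reliably follows the DARM1 FM1 switch-off by about 10 seconds is to measure, for each lock acquisition, the delay from the switch-off time to the first large excursion in the DARM or ETMY L2 signal. A minimal sketch, assuming the channel data have already been fetched into sorted time and value lists (fetching is not shown) and that a hypothetical amplitude threshold is picked by eye from the attached plots; the function name is illustrative:

```python
def first_excursion_delay(times, values, t_switch, threshold):
    """Return seconds from t_switch to the first sample with |value| > threshold.

    times must be sorted ascending (e.g. GPS seconds). Excursions before
    t_switch are ignored. Returns None if the signal never exceeds the
    threshold after t_switch.
    """
    for t, x in zip(times, values):
        if t >= t_switch and abs(x) > threshold:
            return t - t_switch
    return None


if __name__ == "__main__":
    # Synthetic example only: 30 s at 256 Hz with a short glitch at t = 15 s
    # and a filter switch-off at t = 5 s.
    fs = 256
    times = [i / fs for i in range(30 * fs)]
    values = [5.0 if 15.0 <= t < 15.1 else 0.0 for t in times]
    print(first_excursion_delay(times, values, t_switch=5.0, threshold=1.0))
```

Running this over several lock acquisitions would show whether the delay clusters near 10 s (pointing at FM1) or wanders (pointing elsewhere).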

In the locklosses we saw on Jan 14th (82277) after the OMC change (plot), I don't see the fast glitch, but there is a larger, slower glitch that causes the lockloss. One difference between that date and recently is that the SUS counts were double the size then. Perhaps we always have the large slow glitch, but struggle to survive it when the ground is moving more? Did the h1omc change (82263) fix the fast glitch from FM1 turning off (which seems to come and go), and we were just unlucky with the slower glitch and high ground motion on the day of the change?

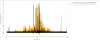

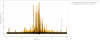

The attached microseism plot shows that the microseism was much worse around Jan 14th than it is now.

Around 2025-01-21 22:29:23 UTC (GPS 1421533781) there was a lockloss in ISC_LOCK state 557, before FM1 was turned off.
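The UTC and GPS times quoted in this entry differ by the GPS epoch offset plus accumulated leap seconds. A minimal conversion sketch, assuming the GPS-UTC offset of 18 s that has held since the 2017-01-01 leap second (older times would need a leap-second table); this is an illustration, not the gpstime tool's own code:

```python
from datetime import datetime, timedelta, timezone

GPS_EPOCH = datetime(1980, 1, 6, tzinfo=timezone.utc)  # GPS time zero

# Assumption: GPS - UTC = 18 s. True since the last leap second
# (2017-01-01); earlier dates need a leap-second table.
LEAP_SECONDS = 18

# Fixed UTC-8 offset; only correct for PST, not for PDT in summer.
PST = timezone(timedelta(hours=-8), "PST")


def gps_to_utc(gps: float) -> datetime:
    """Convert GPS seconds to a timezone-aware UTC datetime."""
    return GPS_EPOCH + timedelta(seconds=gps - LEAP_SECONDS)


def utc_to_gps(utc: datetime) -> float:
    """Convert a timezone-aware datetime to GPS seconds."""
    return (utc - GPS_EPOCH).total_seconds() + LEAP_SECONDS


if __name__ == "__main__":
    print(gps_to_utc(1421533781))
    print(utc_to_gps(datetime(2025, 1, 21, 22, 29, 23, tzinfo=timezone.utc)))
    print(gps_to_utc(1421080851.517638).astimezone(PST))
```

These calls reproduce the lockloss time above and the PST/UTC/GPS triple printed by gpstime at the start of the simulines run.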

The lockloss appears to have happened about 15 seconds after executing the code block where self.counter == 2, i.e. about halfway through the 31-second wait before the self.counter == 3 and 4 blocks execute. See attached graph.
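To make the timing concrete, the sketch below models a counter-driven state that runs one block per counter value and waits 31 seconds after the counter == 2 block before running blocks 3 and 4. It is a hypothetical stand-in written for illustration (the class, its clock argument and the helper function are assumptions), not the actual ISC_LOCK state 557 code; the helper shows how a lockloss about 15 s after block 2 lands near the midpoint of the wait.

```python
import time


class CounterStep:
    """Hypothetical counter-driven state, polled repeatedly via run().

    Each counter value is one block of actions. After block 2 the state
    waits wait_s seconds before running blocks 3 and 4.
    """

    def __init__(self, wait_s=31.0, clock=time.monotonic):
        self.counter = 1
        self.wait_s = wait_s
        self.clock = clock          # callable returning the current time in s
        self.wait_started = None
        self.log = []               # (time, block) pairs for later comparison

    def run(self):
        now = self.clock()
        if self.counter == 1:
            self.log.append((now, 1))
            self.counter = 2
        elif self.counter == 2:
            self.log.append((now, 2))
            self.wait_started = now  # start of the 31 s wait
            self.counter = 3
        elif self.counter in (3, 4):
            if now - self.wait_started < self.wait_s:
                return False         # still inside the wait period
            self.log.append((now, self.counter))
            self.counter += 1
        return self.counter > 4      # True once all four blocks have run


def position_in_wait(block2_time, lockloss_time, wait_s=31.0):
    """Return (seconds into the wait, fraction of the wait) at a lockloss."""
    elapsed = lockloss_time - block2_time
    return elapsed, elapsed / wait_s
```

For example, position_in_wait(0.0, 15.0) gives 15 s into the wait, a fraction of about 0.48, i.e. roughly halfway, before blocks 3 and 4 would have run.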