J. Kissel, T. Sanchez, E. Goetz, L. Dartez

*EDIT* This limitation only affects data pulled out with test points from the A0/B0 filter outputs, because those are recorded in single precision, not double precision. All internal calculations are done in double precision, and the final calibrated gravitational wave strain is both calculated in, and then stored in frames as, double precision.

Back in July 2024, I started to characterize the super-16 kHz-Nyquist frequency data off the OMC DCPDs with the live 524 kHz channels. See LHO:78516 for the whole story, but we got stalled when we ran into what we believed was some sort of single-precision numerical noise, limited at the equivalent of 1e-12 [A/rtHz] DCPD current or 1e-6 [V/rtHz] ADC voltage.

In LHO:78559, we ruled out the single-precision calculation of the ASD as the cause by running the same DCPD signal through a special version of DTT which uses double precision for the pwelch algorithm. That version of the data showed that the high-frequency limitation is still there, so it is NOT the precision of DTT.

In that same data set, we also showed that asking DTT to remove the mean, i.e. the large DC component of the signal, did NOT have any impact on this limit either.

And it's in removing the mean in the front end (rather than in DTT) that we reveal / confirm that it is a single-precision limit in the test point readbacks.

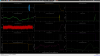

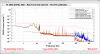

Check out the 1st attachment, which is the data from LHO:78559 but with no DTT calibration applied. That means the channels are in whatever units they have coming off of the front-end -- in this case milliamps, or [mA].

The DC value of the test point channel during the time of measurement was ~20 [mA].

The front-end computes all its filtering in double precision, but the readbacks of the products of those calculations are single precision. An IEEE 754 32-bit base-2 floating-point, single precision channel has 24 bits of significance (excluding the sign and exponent) to hold the entire frequency dependent content of a time-series that has a DC value of ~20 [mA]. Because the step size of a floating-point number is set by its exponent, the 20.43 [mA] DC component effectively rounds up to the next 2^n value, i.e. 2^5 = 32 ["mA"], when working out the resolution.
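As a quick cross-check of that step size, here is a minimal sketch using only the Python standard library. The helper names `to_float32` and `float32_step` are made up for illustration, and nothing here touches the front-end code; it only mimics what storing a double in a 32-bit float does.

```python
import math
import struct


def to_float32(x: float) -> float:
    """Round a Python float (a double) to the nearest float32, returned as a double."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def float32_step(x: float) -> float:
    """Spacing between adjacent float32 values at magnitude |x| (normal numbers only)."""
    _, exponent = math.frexp(abs(x))  # |x| = m * 2**exponent with 0.5 <= m < 1
    return 2.0 ** (exponent - 24)     # float32 carries 24 significand bits


dc = 20.43                 # [mA], DC value of the test point channel
step = float32_step(dc)    # same number as 32 / 2**24
base = to_float32(dc)      # the DC value as the readback would store it

print("step size         :", step)
print("32 / 2^24         :", 32 / 2**24)
# A wiggle of a quarter step on top of the DC value is lost in the readback ...
print("quarter step kept?:", to_float32(base + 0.25 * step) != base)
# ... while a full step survives.
print("full step kept?   :", to_float32(base + step) != base)
```

Anything riding on the ~20 [mA] DC level that is smaller than about one step cannot show up in the single-precision readback, no matter how it was computed upstream.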

Eq. 2.2 of the Liquid Instruments article on quantization noise suggests that the amplitude spectral density of 1 bit of noise, spread across the 0 to 2^18 Hz (f_Nyquist) frequency range that we care about, is

n_{ASD,RMS} = sqrt( DELTA^2 / (12 * f_Nyquist) )

= DELTA * sqrt( 1 / 12 * 1/f_Nyquist )

where

- DELTA is the minimum step resolution (i.e. the peak value / 2^(number of significant bits)),

- the factor of 1/12 comes from the expectation value of the noise power, i.e. the integral of the instantaneous noise power times the probability density of the rounding error, which is uniformly distributed across one bit (specifically the least significant one)

(and we use the square root because we want the amplitude not the power)

- the factor of 1/f_Nyquist comes from spreading out the (presumably frequency independent) power over the entire frequency range

(and again, we use square root because we want the amplitude not the power)

In line-by-line math, that's

[ 32 ["mA"_DC] peak value

* (1/2^24) significant bits in single precision ]

* [ 1 bit

* (1/sqrt(12)) expectation value of quantization noise amplitude spread across 1 bit

* (1/sqrt(2^18 Hz)) quantization noise spread across 0 to Nyquist frequency range ]

= [ 32 / (2^24) ] * sqrt[ 1 / (12 * 2^18) ]

= 1.07539868e-9 ["mA"/rtHz]

This number is *exactly* the high-frequency asymptote we see. BINGO!
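For anyone who wants to redo the arithmetic, here is a minimal sketch of the formula above in Python. The function name `quantization_asd` and its arguments are made up for illustration; the inputs are the 32 ["mA"] peak value, the 24 significant bits, and the 2^18 Hz Nyquist frequency used above.

```python
import math


def quantization_asd(peak: float, f_nyquist: float, bits: int = 24) -> float:
    """Quantization noise ASD, DELTA * sqrt(1 / (12 * f_Nyquist)), in [peak units / rtHz]."""
    delta = peak / 2**bits                       # minimum step resolution
    return delta * math.sqrt(1.0 / (12.0 * f_nyquist))


# 32 ["mA"] peak value, 524 kHz channel so f_Nyquist = 2^18 Hz
asd = quantization_asd(peak=32.0, f_nyquist=2**18)
print(f"{asd:.8e} [mA/rtHz]")
```

The same function can be re-used with a smaller peak value to estimate where the limit moves once the DC component is removed.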

The next step was then to create pick-off paths of the ADC channels and high-pass -- i.e. remove the large DC component of the signal -- in the front-end. Importantly, this has to be the *first* filter, so the DC component is removed before any other calculation is done. We added the infrastructure and installed the filtering (LHO:78956, LHO:78975), but have not come back to the data until today.

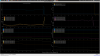

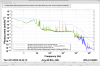

Today, we're finally looking at the front-end OMC DCPD data that's been high-pass filtered with a 5th order, 1 Hz elliptic high-pass, with 40 dB stop-band attenuation and 1 dB of ripple:

ellip("HighPass",5,1,40,1)

The odd (5th) order means that the DC component is completely suppressed, leaving only the remaining frequency-dependent accumulated RMS, which is 5.8901e-4 [mA_RMS], to set the upper limit of the dynamic range and define the precision limit.
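The odd-vs-even order point can be checked outside of Foton. The sketch below uses `scipy.signal.ellip` as a stand-in for the Foton design string, which is an assumption: it designs the analog prototype only (not the digital filter actually installed in the front end), and the helper name `dc_zero_count` is made up for illustration.

```python
import numpy as np
from scipy import signal


def dc_zero_count(order: int) -> int:
    """Count the zeros sitting exactly at s = 0 (DC) for an elliptic high-pass.

    Assumed parameters, read off the post: 1 dB ripple, 40 dB stop-band
    attenuation, 1 Hz corner (given in rad/s for the analog design).
    """
    zeros, _poles, _gain = signal.ellip(
        order, 1, 40, 2 * np.pi * 1.0,
        btype="highpass", analog=True, output="zpk",
    )
    return int(np.sum(zeros == 0))


print("5th order zeros at DC:", dc_zero_count(5))
print("4th order zeros at DC:", dc_zero_count(4))
```

In this design an odd order puts a zero right at DC, so the DC component is removed entirely, while an even order only bottoms out at the stop-band attenuation and would leave a small DC remnant in the readback.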

SIDE QUEST -- Fractional numbers are much less intuitive to "just round up to the nearest power of 2^n," so the equivalent of "converting 20.43 [mA] to 32 ["mA"]" for 5.8901e-4 is instead a process of,

Converting 5.8901e-4 to floating point binary,

# sign exponent fraction

0 01110100 00110100110110111110111

# round up the fraction part

0 01110100 01000000000000000000000

# convert back to decimal

0.00061035156

That takes the quantization limit down to

= [ 6.1035156e-4 / (2^24) ] * sqrt[ 1 / (12 * 2^18) ]

= 2.05e-14 ["mA"/rtHz]
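The side quest can be reproduced with the standard library. This is a minimal sketch: `float32_fields` is a made-up helper name, and the rounded value is built directly from the bit pattern quoted above (exponent 01110100, fraction 0100...0) rather than by any automatic rounding rule.

```python
import math
import struct


def float32_fields(x: float) -> tuple[str, str, str]:
    """Return the (sign, exponent, fraction) bit strings of x stored as an IEEE 754 float32."""
    (raw,) = struct.unpack(">I", struct.pack(">f", x))
    bits = f"{raw:032b}"
    return bits[0], bits[1:9], bits[9:]


sign, exponent, fraction = float32_fields(5.8901e-4)
print(sign, exponent, fraction)

# Value of the rounded pattern 0 01110100 0100...0:
# (1 + 0.25) * 2^(exponent - 127), with 0.25 from the single set fraction bit.
rounded = (1 + 0.25) * 2.0 ** (int("01110100", 2) - 127)
print(rounded)

# Same quantization formula as before, with the rounded RMS as the peak value.
limit = rounded / 2**24 * math.sqrt(1 / (12 * 2**18))
print(f"{limit:.3e} [mA/rtHz]")
```

The sign and exponent fields are the part that matters for the step size; the fraction bits depend on exactly how many digits of the RMS value are fed in.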

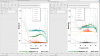

Take a look at the 2nd attachment, which compares the nominal OMC DCPD data to this 1 Hz high-passed data. One can now see all of the AA filtered data all the way out to the 262 kHz Nyquist frequency, because that data only goes as low as 1e-13 [mA/rtHz], above the new 2.05e-14 ["mA"/rtHz] precision limit.

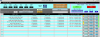

Finally, in the last attachment, we re-cast this into ADC noise units, to show the data against the trace we'd had as a benchmark before. Note *this* comparison is a false comparison because the digital AA filtering is applied after the ADC noise is added. So -- don't read anything into the fact that the resolved noise goes below the ADC noise -- it's just a guide to the eye and a benchmark to remind folks that the numerical precision limit is *not* ADC noise.

In conclusion, if we want to investigate the OMC DCPD data above 7 kHz with test points, we need to make sure to use a version where the data is high-passed significantly.

So, now we can actually begin doing that...