Ansel reported that a peak in DARM that interfered with the sensitivity to the Crab pulsar followed a similar time-frequency path to a peak in the beam splitter microphone signal. I found that this was also the case on a shorter time scale, and took advantage of the long down times last weekend to use a movable microphone to find the source of the peak. Microphone signals don’t usually show coherence with DARM even when they are causing noise, probably because the coherence length of the sound is smaller than the spacing between the coupling sites and the microphones. This is why matching precise time-frequency paths is important.
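
The path comparison described above can be illustrated with a minimal sketch. It is not the analysis actually used here: the data are synthetic stand-ins for DARM and microphone channels, and the function names (`peak_track`, `track_similarity`), sample rate, band edges, and line frequencies are all hypothetical choices made for illustration. The idea is to follow the strongest spectral bin in a narrow band segment by segment and then compare the resulting frequency tracks, which uses only the peak frequency and not the relative phase between channels.

```python
import numpy as np


def peak_track(x, fs, seg_len, f_lo, f_hi):
    """Frequency of the strongest bin in [f_lo, f_hi] for each segment of x."""
    n_seg = len(x) // seg_len
    segs = x[: n_seg * seg_len].reshape(n_seg, seg_len)
    # Hann-windowed power spectrum of every segment (no overlap, for simplicity)
    spec = np.abs(np.fft.rfft(segs * np.hanning(seg_len), axis=1)) ** 2
    freqs = np.fft.rfftfreq(seg_len, d=1.0 / fs)
    band = (freqs >= f_lo) & (freqs <= f_hi)
    return freqs[band][np.argmax(spec[:, band], axis=1)]


def track_similarity(track_a, track_b):
    """Pearson correlation between two peak-frequency tracks."""
    return float(np.corrcoef(track_a, track_b)[0, 1])


def wandering_line(t, fs, f_inst, amplitude, phase0, rng):
    """Sinusoid with instantaneous frequency f_inst, buried in white noise."""
    phase = 2 * np.pi * np.cumsum(f_inst) / fs + phase0
    return amplitude * np.sin(phase) + rng.normal(scale=1.0, size=t.size)


# --- Synthetic demo (all numbers are assumptions, not measured values) ---
rng = np.random.default_rng(0)
fs = 256                      # Hz, hypothetical sample rate
t = np.arange(fs * 600) / fs  # 10 minutes of data

# A line that wanders slowly, shared by the "microphone" and "DARM" channels
f_shared = 59.0 + 0.3 * np.sin(2 * np.pi * t / 300.0)
# A line in a third channel that wanders on a different schedule
f_other = 59.0 + 0.3 * np.sin(2 * np.pi * t / 200.0 + 1.0)

mic = wandering_line(t, fs, f_shared, 1.0, 0.0, rng)
darm = wandering_line(t, fs, f_shared, 0.5, 1.0, rng)
other = wandering_line(t, fs, f_other, 1.0, 0.0, rng)

seg_len = 10 * fs             # 10 s segments give 0.1 Hz resolution
mic_track = peak_track(mic, fs, seg_len, 58.0, 60.0)
darm_track = peak_track(darm, fs, seg_len, 58.0, 60.0)
other_track = peak_track(other, fs, seg_len, 58.0, 60.0)

matched = track_similarity(mic_track, darm_track)
unmatched = track_similarity(other_track, darm_track)
print(f"matched tracks:   r = {matched:.2f}")
print(f"unmatched tracks: r = {unmatched:.2f}")
```

In this toy case the two channels that share a wandering line give strongly correlated tracks, while the channel with a differently wandering line does not. With real data, the segment length would need to be chosen so that the frequency resolution is finer than the wander of the peak.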

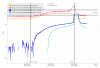

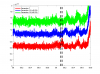

Figure 1 shows DARM and the problematic peak in microphone signals. The second page of Figure 1 shows the portable microphone signal at a location by the staging building and at a location near the TCS chillers. I used accelerometers to confirm that the microphone had correctly identified the TCS chillers as the source, and to distinguish between the two chillers (Figure 2).

I was surprised that the acoustic signal was so strong that I could see it at the staging building - when I found the signal outside, I assumed it was coming from some external HVAC component and spent quite a bit of time searching outside. I think the signal may be this strong because the suspended mezzanine (see photos on second page of Figure 2) acts as a sort of soundboard, helping couple the chiller vibrations to the air.

Any direct vibrational coupling can be addressed by vibrationally isolating the chillers. This may even help with acoustic coupling if the soundboard theory is correct, so we might try this first. However, the safest solution is either to change the load so that the peaks move to a different frequency, or to put the chillers on vibration isolation in the hallway of the cinder-block HVAC housing so that the stiff room blocks the low-frequency sound.

Reducing the coupling is another mitigation route. Vibrational coupling has apparently increased, so I think we should check jitter coupling at the DCPDs in case recent damage has made them more sensitive to beam spot position.

For next-generation detectors, it might be a good idea to build the mechanical room from cinder blocks or an equivalent material to reduce acoustic coupling from low-frequency sources.