Sheila, writing for a large crew (Jason, Vicky, Daniel, Keita, Marc, Rick)

This morning we spent about 3 hours with the IMC offline and the ISS autolocker requested to off (from 16:30 UTC). We then unplugged the IMC feedback to the IMC VCO (from 17:08 UTC to 19:54 UTC).

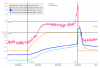

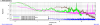

The attached screenshots show a few large disturbances during this time. Each one appears in several places:

- as an amplitude of 0.1 on the PMC mixer channel;
- as large oscillations (30 counts) in the FSS fast monitor;
- as dips in the reference cavity transmission;
- as glitches in the PMC high voltage.

The second screenshot is a zoomed-in view of the problem times.

We also noticed that unplugging the IMC feedback to the VCO changed the reference cavity transmitted power by about 1%. Daniel suggests this might be OK. His explanation is that the change in laser frequency causes a small change in the alignment out of the AOM.

We set the FSS autolocker to off at 19:54 UTC. This doesn't actually disable the FSS, but it lets us track when the reference cavity would go through resonance by watching the trans PD. At 21:19 UTC, Ryan Crouch started locking the IFO because the winds are supposed to be calm this afternoon. We did not see any glitches in this short test, but we need a longer one.
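Watching the trans PD for resonance passes amounts to flagging the intervals where the transmitted power rises above a threshold. Below is a minimal Python sketch of that check. It assumes the trans PD data have already been fetched as plain time and power sequences. The function name `find_resonance_passes` and the threshold value are hypothetical, not part of any site tool.

```python
from typing import List, Optional, Sequence, Tuple


def find_resonance_passes(
    times: Sequence[float],
    trans: Sequence[float],
    threshold: float,
) -> List[Tuple[float, float]]:
    """Return (start, end) time pairs where the trans PD is at or above threshold.

    Hypothetical helper: `threshold` is an assumed level chosen by the user,
    not a value from the log. `end` is the time of the first sample that
    drops back below threshold, or the last sample time if the signal is
    still above threshold when the data end.
    """
    if len(times) != len(trans):
        raise ValueError("times and trans must have the same length")
    passes: List[Tuple[float, float]] = []
    start: Optional[float] = None  # time at which the current above-threshold run began
    for t, p in zip(times, trans):
        if p >= threshold and start is None:
            start = t  # rising edge: transmission crosses up through threshold
        elif p < threshold and start is not None:
            passes.append((start, t))  # falling edge: transmission drops back down
            start = None
    if start is not None:
        passes.append((start, times[-1]))  # still above threshold at end of data
    return passes


# Toy data, not real trans PD samples.
times = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
trans = [0.0, 0.1, 0.9, 0.95, 0.2, 0.8]
print(find_resonance_passes(times, trans, 0.5))  # → [(2.0, 4.0), (5.0, 5.0)]
```

A simple threshold crossing keeps the sketch short. On noisy real data, some hysteresis (separate up and down thresholds) would likely be needed to avoid splitting one pass into several.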

We plan to continue this PMC-only test overnight, when the wind is supposed to pick back up.