FAMIS 31053

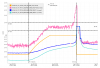

The NPRO power, the amplifier powers, and a few of the laser diodes inside the amps show some drift over the past several days, and the drifts track each other, in some cases inversely. Looking at past checks, this relationship isn't new, but the NPRO power doesn't normally drift around this much. The difference still isn't large, so I'm mostly just noting it here; I don't think it's a major issue.
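
One rough way to put a number on "follows each other, sometimes inversely" would be a Pearson correlation coefficient between two trend series sampled at the same times: values near +1 mean the channels drift together, values near -1 mean they drift inversely. This is only a minimal sketch. The function name `pearson` and the sample lists are hypothetical placeholders, not real channel data or the method used for this check.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sums of products of deviations from the means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant series has no defined correlation")
    return sxy / math.sqrt(sxx * syy)


# Placeholder numbers for illustration only (NOT real readings).
trend_a = [1.0, 2.0, 3.0, 4.0]
trend_b = [2.0, 4.0, 6.0, 8.0]   # rises with trend_a
trend_c = [8.0, 6.0, 4.0, 2.0]   # falls as trend_a rises

print(pearson(trend_a, trend_b))  # close to +1: drifting together
print(pearson(trend_a, trend_c))  # close to -1: drifting inversely
```

A coefficient like this only captures linear co-movement over the chosen window, so it would complement the trend plots rather than replace them.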

The rise in PMC reflected power has definitely slowed down, and the reflected power has even dropped noticeably, by almost 0.5 W, in the past day or so.