RickS, FranciscoL, TonyS, JoeB, Dripta.

On Saturday, Sept. 21, 2024, the Pcal force coefficient EPICS record values were updated.

At X-end:

Old : H1:CAL-PCALX_FORCE_COEFF_RHO_T 8305.09

New : H1:CAL-PCALX_FORCE_COEFF_RHO_T 8300

Old : H1:CAL-PCALX_FORCE_COEFF_RHO_R 10716.6

New : H1:CAL-PCALX_FORCE_COEFF_RHO_R 10713.3

Old : H1:CAL-PCALX_FORCE_COEFF_TX_PD_ADC_BG 9.6571

New : H1:CAL-PCALX_FORCE_COEFF_TX_PD_ADC_BG 8.81815

Old : H1:CAL-PCALX_FORCE_COEFF_RX_PD_ADC_BG 0.7136

New : H1:CAL-PCALX_FORCE_COEFF_RX_PD_ADC_BG 0.56678

Old : H1:CAL-PCALX_FORCE_COEFF_TX_OPT_EFF_CORR 0.9938

New : H1:CAL-PCALX_FORCE_COEFF_TX_OPT_EFF_CORR 0.99331

Old : H1:CAL-PCALX_FORCE_COEFF_RX_OPT_EFF_CORR 0.9948

New : H1:CAL-PCALX_FORCE_COEFF_RX_OPT_EFF_CORR 0.9944

Old : H1:CAL-PCALX_XY_COMPARE_CORR_FACT 0.9991

New : H1:CAL-PCALX_XY_COMPARE_CORR_FACT 0.99855

At Y-end:

Old : H1:CAL-PCALY_FORCE_COEFF_RHO_T 7145.62

New : H1:CAL-PCALY_FORCE_COEFF_RHO_T 7155.15

Old : H1:CAL-PCALY_FORCE_COEFF_RHO_R 10649.6

New : H1:CAL-PCALY_FORCE_COEFF_RHO_R 10663.6

Old : H1:CAL-PCALY_FORCE_COEFF_TX_PD_ADC_BG 18.2388

New : H1:CAL-PCALY_FORCE_COEFF_TX_PD_ADC_BG 18.3088

Old : H1:CAL-PCALY_FORCE_COEFF_RX_PD_ADC_BG -0.2591

New : H1:CAL-PCALY_FORCE_COEFF_RX_PD_ADC_BG -0.7353

Old : H1:CAL-PCALY_FORCE_COEFF_TX_OPT_EFF_CORR 0.9923

New : H1:CAL-PCALY_FORCE_COEFF_TX_OPT_EFF_CORR 0.99191

Old : H1:CAL-PCALY_FORCE_COEFF_RX_OPT_EFF_CORR 0.9934

New : H1:CAL-PCALY_FORCE_COEFF_RX_OPT_EFF_CORR 0.9931

Old : H1:CAL-PCALY_XY_COMPARE_CORR_FACT 1.0005

New : H1:CAL-PCALY_XY_COMPARE_CORR_FACT 1.00092
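For a quick sense of scale, the fractional change of each multiplicative coefficient can be computed directly from the old and new values listed above. The snippet below is only an illustrative sketch, not part of the Pcal update procedure: the helper name `percent_change` is hypothetical, and the ADC background records are left out because they are offsets, for which a percent change is less meaningful.

```python
# (old, new) values copied from the lists above.
RECORDS = {
    "H1:CAL-PCALX_FORCE_COEFF_RHO_T": (8305.09, 8300.0),
    "H1:CAL-PCALX_FORCE_COEFF_RHO_R": (10716.6, 10713.3),
    "H1:CAL-PCALX_FORCE_COEFF_TX_OPT_EFF_CORR": (0.9938, 0.99331),
    "H1:CAL-PCALX_FORCE_COEFF_RX_OPT_EFF_CORR": (0.9948, 0.9944),
    "H1:CAL-PCALX_XY_COMPARE_CORR_FACT": (0.9991, 0.99855),
    "H1:CAL-PCALY_FORCE_COEFF_RHO_T": (7145.62, 7155.15),
    "H1:CAL-PCALY_FORCE_COEFF_RHO_R": (10649.6, 10663.6),
    "H1:CAL-PCALY_FORCE_COEFF_TX_OPT_EFF_CORR": (0.9923, 0.99191),
    "H1:CAL-PCALY_FORCE_COEFF_RX_OPT_EFF_CORR": (0.9934, 0.9931),
    "H1:CAL-PCALY_XY_COMPARE_CORR_FACT": (1.0005, 1.00092),
}


def percent_change(old, new):
    """Return the change from old to new as a percentage of old (hypothetical helper)."""
    return 100.0 * (new - old) / old


# Print one line per record with its signed percent change.
for name, (old, new) in RECORDS.items():
    print(f"{name:45s} {percent_change(old, new):+.3f} %")
```

With these inputs, every listed coefficient changes by less than 0.25 % in magnitude.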

The caput commands used to update the EPICS records can be found here: https://git.ligo.org/Calibration/pcal/-/blob/master/O4/EPICS/results/CAPUT/Pcal_H1_CAPUTfile_O4brun_2024-09-16.txt?ref_type=heads
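As a rough illustration of what such commands look like, the sketch below formats a few of the new X-end values with the standard EPICS command-line form `caput <PV> <value>`. It only prints the lines and writes nothing to any record. The helper name `caput_line` is hypothetical, and the actual contents and layout of the linked CAPUT file may differ.

```python
# New X-end values copied from the list above, kept as strings so the
# printed digits match the entry exactly.
NEW_VALUES = {
    "H1:CAL-PCALX_FORCE_COEFF_RHO_T": "8300",
    "H1:CAL-PCALX_FORCE_COEFF_RHO_R": "10713.3",
    "H1:CAL-PCALX_FORCE_COEFF_TX_PD_ADC_BG": "8.81815",
    "H1:CAL-PCALX_FORCE_COEFF_RX_PD_ADC_BG": "0.56678",
}


def caput_line(channel, value):
    """Format one EPICS 'caput <PV> <value>' command line (hypothetical helper)."""
    return f"caput {channel} {value}"


# Dry run: print the commands instead of executing them.
for channel, value in NEW_VALUES.items():
    print(caput_line(channel, value))
```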

SDF diff has been accepted.

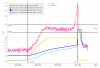

It has been roughly 4 months since the last update in May 2024. We plan to update the EPICS records regularly because, over the course of a year, we have observed that the Rx sensor calibration exhibits a systematic variation of as much as +/- 0.25% at LHO. End station measurements allow us to monitor this systematic change over time. We will monitor the X/Y comparison factor to see whether these EPICS changes had any significant effect on it. If the update was done correctly, there should be no significant change in the X/Y calibration comparison factor. If we find a significant change, we will correct the EPICS records accordingly.

The attached .pdf file shows the Rx calibration (ct/W) trend at the LHO X and Y end stations for the entire O4 run. It marks the measurements used to calculate the Pcal force coefficient EPICS record values for the previous update, at the start of the O4b run, as well as for the current update.

The .png files are screenshots of the MEDM screen taken after the EPICS update.