I was able to get some more measurements done for the PRM and SRM estimators, so I'll note them below and then summarize the current measurement filenames, since there are a lot of them (previous measurements: 87801 and 87950).

PRM

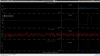

DAMP Y gain @ 20%

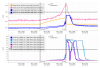

I finished taking the final few DAMP Y gain @ 20% measurements for H1SUSPRM M1 to M1.

Settings

- PRM aligned

- DAMP Y gain to 20% (L and P gains nominal)

- CD state to 1

- M1 TEST bank gains all at 1 (nominally P and Y have a gain other than 1)

Measurements

2025-11-11_1700_H1SUSPRM_M1toM1_CDState1_M1YawDampingGain0p1_WhiteNoise_{L,T,V,R,P,Y}_0p02to50Hz.xml r12789

- I took the V, R, P, and Y measurements today, since Jeff had taken L and T for this configuration a couple of weeks ago

- To reduce confusion, I changed the date/time of all M1 to M1 PRM DAMP Y gain @ 20% measurements to 2025-11-11_1700 so they're grouped together. This means the M1 to M1 L and T measurements from a couple of weeks ago now carry this date/time as well
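The `{L,T,V,R,P,Y}` in these filenames is shorthand for six files, one per degree of freedom. As a minimal sketch (the `expand_dofs` helper is hypothetical and assumes a single comma-separated brace group per name), the shorthand can be expanded into the individual filenames like this:

```python
import re


def expand_dofs(template: str) -> list[str]:
    """Expand a single {A,B,...} group into one filename per item (hypothetical helper)."""
    match = re.search(r"\{([^{}]*)\}", template)
    if match is None:
        # No brace group: the template already names one file
        return [template]
    head, tail = template[:match.start()], template[match.end():]
    return [head + dof + tail for dof in match.group(1).split(",")]


TEMPLATE = (
    "2025-11-11_1700_H1SUSPRM_M1toM1_CDState1_M1YawDampingGain0p1_"
    "WhiteNoise_{L,T,V,R,P,Y}_0p02to50Hz.xml"
)

if __name__ == "__main__":
    # Print the six per-DOF filenames covered by the template
    for name in expand_dofs(TEMPLATE):
        print(name)
```

Because all six files now share the 2025-11-11_1700 stamp, one template covers both the L/T files taken earlier and the V/R/P/Y files taken today.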

SRM

DAMP {L,P,Y} gain at 20%

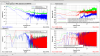

I took HAM5 SUSPOINT to SRM M1 measurements.

Settings

- SRM aligned

- DAMP {L,P,Y} gain to 20%

- CD state to 1

Measurements

2025-11-11_1630_H1ISIHAM5_ST1_SRMSusPoint_M1LPYDampingGain0p1_WhiteNoise_{L,T,V,R,P,Y}_0p02to50Hz.xml r12790

- P and Y don't have very good coherence, but I ran out of time to try larger excitation gains

Big measurement list:

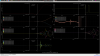

PRM measurements:

DAMP gain LPY @ 20% (-0.1)

/ligo/svncommon/SusSVN/sus/trunk/HSTS/H1/PRM/

SUSPOINT to M1

Common/Data/2025-11-04_1800_H1ISIHAM2_ST1_PRMSusPoint_M1LPYDampingGain0p1_WhiteNoise_{L,T,V,R,P,Y}_0p02to50Hz.xml

M1 to M1

SAGM1/Data/2025-10-28_H1SUSPRM_M1toM1_CDState1_M1LPYDampingGain0p1_WhiteNoise_{L,T,V,R,P,Y}_0p02to50Hz.xml

M2 to M1

SAGM2/Data/2025-10-28_H1SUSPRM_M2toM1_CDState2_M1LPYDampingGain0p1_WhiteNoise_{L,P,Y}_0p02to50Hz.xml

M3 to M1

SAGM3/Data/2025-10-28_H1SUSPRM_M3toM1_CDState2_M1LPYDampingGain0p1_WhiteNoise_{L,P,Y}_0p02to50Hz.xml
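The "20% (-0.1)" labels imply a nominal damping gain of -0.1 / 0.2 = -0.5, assuming 20% means 20% of the nominal gain and that the nominal value is the same for each DOF involved (neither is stated explicitly here). The filenames also appear to write decimals with `p` in place of the point (`0p1` for the 0.1 gain magnitude, `0p02` for 0.02 Hz). A small sketch of both conversions, using the hypothetical helpers `implied_nominal` and `gain_token`:

```python
from decimal import Decimal


def implied_nominal(reduced_gain: Decimal, fraction: Decimal) -> Decimal:
    """Nominal gain implied by a reduced gain, e.g. -0.1 at 20% (assumed relation)."""
    return reduced_gain / fraction


def gain_token(value: Decimal) -> str:
    """Filename-style token for a magnitude: 0.1 -> '0p1' (assumed naming convention)."""
    # normalize() drops trailing zeros; the 'f' format avoids exponent notation
    text = format(abs(value).normalize(), "f")
    return text.replace(".", "p")


if __name__ == "__main__":
    reduced = Decimal("-0.1")
    print("implied nominal gain:", implied_nominal(reduced, Decimal("0.2")))
    print("filename token:", gain_token(reduced))
```

`Decimal` is used instead of `float` so that values like 0.1 and 0.02 stay exact when turned into tokens.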

DAMP gain Y @ 20% (-0.1)

/ligo/svncommon/SusSVN/sus/trunk/HSTS/H1/PRM/

SUSPOINT to M1

Common/Data/2025-11-04_1930_H1ISIHAM2_ST1_PRMSusPoint_M1YawDampingGain0p1_WhiteNoise_{L,T,V,R,P,Y}_0p02to50Hz.xml

M1 to M1

SAGM1/Data/2025-11-11_1700_H1SUSPRM_M1toM1_CDState1_M1YawDampingGain0p1_WhiteNoise_{L,T,V,R,P,Y}_0p02to50Hz.xml

M2 to M1

SAGM2/Data/2025-10-28_H1SUSPRM_M2toM1_CDState2_M1YawDampingGain0p1_WhiteNoise_{L,P,Y}_0p02to50Hz.xml

M3 to M1

SAGM3/Data/2025-10-28_H1SUSPRM_M3toM1_CDState2_M1YawDampingGain0p1_WhiteNoise_{L,P,Y}_0p02to50Hz.xml

SRM (so far)

DAMP gain LPY @ 20% (-0.1)

/ligo/svncommon/SusSVN/sus/trunk/HSTS/H1/SRM/

SUSPOINT to M1

Common/Data/2025-11-11_1630_H1ISIHAM5_ST1_SRMSusPoint_M1LPYDampingGain0p1_WhiteNoise_{L,T,V,R,P,Y}_0p02to50Hz.xml
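Every relative path in the list sits under the per-suspension SVN directory given above it. A minimal sketch for checking which of the expanded files exist in a local checkout, assuming the checkout lives at that same `/ligo/svncommon/...` path; `expand_dofs` and `missing_files` are hypothetical helpers, not part of the SUS tools:

```python
import re
from pathlib import Path

# Base directory and relative template copied from the SRM entry above
SRM_BASE = Path("/ligo/svncommon/SusSVN/sus/trunk/HSTS/H1/SRM")
SRM_SUSPOINT = (
    "Common/Data/2025-11-11_1630_H1ISIHAM5_ST1_SRMSusPoint_M1LPYDampingGain0p1_"
    "WhiteNoise_{L,T,V,R,P,Y}_0p02to50Hz.xml"
)


def expand_dofs(template: str) -> list[str]:
    """Expand a single {A,B,...} group into one name per item."""
    match = re.search(r"\{([^{}]*)\}", template)
    if match is None:
        return [template]
    head, tail = template[:match.start()], template[match.end():]
    return [head + dof + tail for dof in match.group(1).split(",")]


def missing_files(base: Path, relative_template: str) -> list[Path]:
    """Return the expanded paths under base that do not exist on disk."""
    paths = [base / name for name in expand_dofs(relative_template)]
    return [path for path in paths if not path.exists()]


if __name__ == "__main__":
    total = len(expand_dofs(SRM_SUSPOINT))
    missing = missing_files(SRM_BASE, SRM_SUSPOINT)
    print(f"{total - len(missing)} of {total} SRM SUSPOINT to M1 files found")
    for path in missing:
        print("missing:", path)
```

The PRM entries work the same way with the PRM base directory; the M2 and M3 templates expand to three files ({L,P,Y}) instead of six.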