Sheila, Ibrahim

Context: ALSY has been misbehaving (which on its own is not new). Usually, problems with ALS locking come down to an inability to reach high enough flash magnitudes. However, in recent locks we have consistently reached 0.8-0.9 cts, which is historically very lockable, yet ALSY has not been able to lock under these conditions. As such, Sheila and I investigated the extent of misalignment and mode mismatch in the ALSY laser.

Investigation:

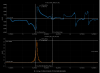

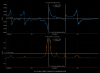

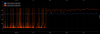

We took two references: a "good" alignment, where ALSY caught swiftly with minimal swinging, and a "bad" alignment, where ALSY caught with frequent suspension swinging. We then compared the widths and magnitudes of their apparent higher-order mode peaks. The two attached screenshots are from two recent locks (last 24 hrs) from which we took this data. We used the known free spectral range and g-factor, along with the ndscope measurements, to obtain the expected higher-order mode spacing, and then compared this to our measurements. While we did not get exact integer values (mode number estimate column), we convinced ourselves that these peaks were indeed our higher-order modes (to an extent that will be investigated further). Having confirmed the mode identification, we then calculated the measured power distribution among these modes.
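For reference, the mode-spacing check can be sketched roughly as below. This is a minimal sketch using the textbook two-mirror cavity relation, not necessarily the exact calculation we did. The function names are made up for illustration, and the numbers in the usage lines are placeholders, not the ALSY values. It assumes the peak offsets from ndscope have already been converted to frequency (e.g. by scaling against the spacing between successive 0,0 flashes).

```python
import math

def transverse_mode_spacing(fsr_hz, g1, g2):
    """Transverse mode spacing (Hz) of a stable two-mirror cavity.

    Textbook relation: f_tms = FSR * arccos(+/- sqrt(g1*g2)) / pi,
    where the sign follows the sign of the mirror g-factors.
    """
    product = g1 * g2
    if not 0.0 <= product <= 1.0:
        raise ValueError("cavity is unstable: need 0 <= g1*g2 <= 1")
    # In a stable cavity both g-factors share a sign; negative g-factors
    # select the negative branch of the square root.
    sign = -1.0 if (g1 < 0 or g2 < 0) else 1.0
    return fsr_hz * math.acos(sign * math.sqrt(product)) / math.pi

def mode_number_estimate(peak_offset_hz, fsr_hz, g1, g2):
    """Peak offset from the 0,0 resonance in units of the mode spacing.

    A value close to an integer n suggests the peak is a mode of order n.
    Folding of high-order modes by the FSR is ignored here.
    """
    return peak_offset_hz / transverse_mode_spacing(fsr_hz, g1, g2)

# Placeholder numbers only (NOT the ALSY cavity parameters).
spacing = transverse_mode_spacing(100.0, 0.5, 0.5)
estimate = mode_number_estimate(66.0, 100.0, 0.5, 0.5)
print(f"mode spacing: {spacing:.2f} Hz, mode number estimate: {estimate:.2f}")
```

A non-integer estimate like the ones in our table could come from uncertainty in the offset-to-frequency conversion or in the g-factor itself, which is part of what still needs checking.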

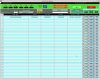

The data is in the attached table screenshot (copying it was not very readable).

Findings:

- Both alignments were found to be pretty bad, as evidenced by the similarity in their fractional magnitudes, so we can't really compare them to one another.

- The magnitude distribution in M(0,0) shows that only 70% of our power is going into the main 0,0 mode. This implies a bad alignment, since we're reading 0.9+ cts on both of these, and the counts are supposed to be normalized to 1.

- ~18% of our power is going into the first higher order mode, which implies a misalignment

- ~6.5% of our power is going into the second order mode, which implies a mode mismatch (and also some misalignment).
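The fractional power per mode can be sketched as below, assuming each fraction is simply that mode's peak height divided by the sum of all identified peak heights. That normalization is an assumption for illustration. The function name is made up and the peak heights are placeholders, not the values from the table.

```python
def mode_power_fractions(peak_heights):
    """Return the fraction of total power in each mode.

    peak_heights maps a mode order (0 for the 0,0 mode, 1 for first
    order, ...) to its measured peak height in arbitrary units.
    """
    total = sum(peak_heights.values())
    if total <= 0:
        raise ValueError("total peak height must be positive")
    return {order: height / total for order, height in peak_heights.items()}

# Placeholder peak heights only (NOT the measured ALSY data).
fractions = mode_power_fractions({0: 8.0, 1: 1.5, 2: 0.5})
for order, fraction in sorted(fractions.items()):
    print(f"order {order}: {fraction:.1%} of total power")
```

Any peaks missed in the scan are left out of the total, so the fractions computed this way are upper bounds on the true share of each mode.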

Next:

- Take more data points from actually good alignments that may have a higher fraction of power in the 0,0 mode (as designed). 70% is apparently quite bad, alignment/mode-match wise.

- See if there's a particular difference between different successful ALSY lock counts

- Since we were quite misaligned, do the same investigation for ALSX and gauge how it compares

Investigation Ongoing