Ibrahim, Rahul

This alog follows alog 79032 and is an in-depth investigation of the F3 OSEM's perceived instability.

From last alog:

"The nicest sounding conclusion here is that something is wrong with the F3 OSEM because it is the only OSEM and/or flag involved in L, P, Y (less coherent measurements) but not in the others; F3 fluctuates and reacts much more erratically than the others, and in Y, the F3 OSEM has the greatest proportion of actuation than P and a higher magnitude than L, so if there were something wrong with F3, we'd see it in Y the loudest. This is exactly where we see the loudest ring-up."

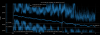

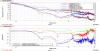

I have attached a gif which shows the free-hanging F3 OSEM moving perceivably more than the others. I have also attached an ndscope visualization of this movement, clearly showing that F3 is actuating harder/swinging wider than F1 and F2 (screenshot 1). This was even more pronounced during the TF excitations, and my current guess is that this is exactly what we're seeing as the 1.5-6 Hz noisiness that persists in all of our TFs to varying degrees. Note that this does not need to be a sensor issue; it could be a mechanical issue whereby an instability rings up modes in this frequency band, and this OSEM is just showing it to us in the degrees of freedom that it rings up/actuates against the most, i.e. P, L and Y.

Investigation:

The first thing I did was take the BOSEM noise spectra, with F1 and F2 as stable controls. While slightly noisy, there was no perceptible discrepancy between the spectra (screenshot 2). There are some peaks and troughs around the problematic 1.5-6 Hz region, but I doubt these are closely related. Given the clean sensor spectra, we may have a mechanical instability on our hands.
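
One way the spectra comparison above could be put into numbers is a band-limited RMS over 1.5-6 Hz for each channel, taken relative to a control. The following is only a minimal sketch under stated assumptions: the data are synthetic stand-ins (not real OSEM data), the channel labels `control_1`, `control_2` and `suspect` are hypothetical, and the averaged-periodogram estimator is a simple NumPy-only substitute for whatever tool actually produced screenshot 2.

```python
import numpy as np

def averaged_asd(x, fs, nperseg=512):
    """Welch-style amplitude spectral density (Hann window, no overlap).

    Assumes nperseg is even so the Nyquist bin is handled correctly.
    """
    x = np.asarray(x, dtype=float)
    window = np.hanning(nperseg)
    scale = fs * np.sum(window ** 2)          # density normalization
    nseg = len(x) // nperseg
    psd = np.zeros(nperseg // 2 + 1)
    for i in range(nseg):
        seg = x[i * nperseg:(i + 1) * nperseg]
        seg = seg - seg.mean()                # remove DC offset per segment
        psd += np.abs(np.fft.rfft(seg * window)) ** 2 / scale
    psd /= nseg
    psd[1:-1] *= 2.0                          # fold to a one-sided spectrum
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, np.sqrt(psd)

def band_rms(freqs, asd, f_lo=1.5, f_hi=6.0):
    """RMS obtained by integrating the PSD between f_lo and f_hi."""
    mask = (freqs >= f_lo) & (freqs <= f_hi)
    df = freqs[1] - freqs[0]
    return np.sqrt(np.sum(asd[mask] ** 2) * df)

# Synthetic stand-in data: two white-noise "controls" and one channel with an
# injected 2 Hz line, to show what a discrepancy would look like.
fs = 64.0
rng = np.random.default_rng(0)
t = np.arange(int(128 * fs)) / fs
channels = {
    "control_1": rng.normal(size=t.size),
    "control_2": rng.normal(size=t.size),
    "suspect": rng.normal(size=t.size) + np.sin(2 * np.pi * 2.0 * t),
}

rms = {}
for name, data in channels.items():
    freqs, asd = averaged_asd(data, fs)
    rms[name] = band_rms(freqs, asd)

ratios = {name: value / rms["control_1"] for name, value in rms.items()}
for name, ratio in ratios.items():
    print(f"{name}: band RMS ratio to control_1 = {ratio:.2f}")
```

A ratio near 1 for every channel would correspond to the "no discrepancy" reading of the spectra; a ratio well above 1 would single out the channel carrying excess in-band motion.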

The next thing I did was trend the F1 and F3 OSEMs to see if one is perceivably louder than the other, but they were quite close in amplitude and identical in frequency (0.4 Hz) (screenshot 3). I used the micron counts here.

The last and most interesting thing I did was take another look at the F3, F2 and F1 trend of the INMON counts (screenshot 1), and indeed it shows that the F3 oscillation takes place at around 2 Hz, which is where our ring-up is loudest across the board. Combined with the clean spectra, this further indicates that there is a mechanical issue at these frequencies (1.5-6 Hz).
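
Reading an oscillation frequency off a trend by eye can be cross-checked by locating the largest peak in the FFT of the time series. The sketch below is illustrative only: it uses a synthetic 2 Hz signal plus noise rather than the real INMON data, and the function name `dominant_frequency`, the sample rate and the `f_min` cutoff are all assumptions made for the example.

```python
import numpy as np

def dominant_frequency(x, fs, f_min=0.1):
    """Return the frequency (Hz) of the largest spectral peak above f_min."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                          # drop the DC offset
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x))))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    valid = freqs >= f_min                    # ignore slow drift below f_min
    return freqs[valid][np.argmax(spectrum[valid])]

# Synthetic stand-in for a 32 s trend sampled at 16 Hz (NOT real data).
fs = 16.0
rng = np.random.default_rng(1)
t = np.arange(int(32 * fs)) / fs
trend = np.sin(2 * np.pi * 2.0 * t) + 0.3 * rng.normal(size=t.size)

f_peak = dominant_frequency(trend, fs)
in_problem_band = 1.5 <= f_peak <= 6.0
print(f"dominant frequency: {f_peak:.2f} Hz, in 1.5-6 Hz band: {in_problem_band}")
```

The frequency resolution is 1/T for a record of length T, so a longer stretch of data gives a finer estimate of where the oscillation sits.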

Rahul suggested that the pitch adjuster may be unlocked and causing some differential pitch as the OSEMs catch up. This may be the case, so I will go check it soon. The pitch adjuster may also affect another issue we are having, OSEM Count Drift (a separate alog, coming soon to a workstation near you).

Conclusion:

There must be an issue, not with the sensor systems, but with the mechanics. Given our recent history of hysteresis, the same thing may be happening at a less perceptible level. Another potential culprit is rising Staging Building temperatures differentially screwing with the blades (Rahul's thought, since there was a measured 2 °F change between yesterday and 3 days ago). We will figure out next steps pending discussion with the team.