Andrei, Naoki

Following 78422, we did FC visibility and ringdown measurements to get the FC loss and finesse. The measured FC loss is 44 ± 27 ppm, which is consistent with the previous value within the error. The measured FC finesse is 8000-11000, which is higher than the expected value of ~6700. We do not know why the estimated finesse is higher than before, but both results indicate that there is no obvious excess FC loss compared to before.

After the cabling in 67409, the OPO dither lock did not work as well as before. We increased the dither lock gain from -10 to -30, and the dither lock got much better. The change is accepted in SDF.

We needed to flip the sign of FC CMB to lock the FC green. We engaged one boost in FC CMB as before.

We turned off LP100 in the FC IR trans PD and reduced the seed power to 0.2 mW. Without LP100, a seed power above 0.2 mW saturated the FC IR trans PD.

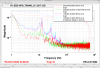

For the visibility measurement, we put the seed on resonance in the FC by adjusting the green VCO tune, then put a large offset on the green VCO tune to take the seed off resonance. The first attachment shows the visibility measurement.

Using the formula in the TAMA paper, Andrei calculated the FC loss. The calculated FC loss is 44 ± 27 ppm, assuming an FC1 transmissivity of 909 ppm and 2% of misalignment and mode mismatch. The FC misalignment and mode mismatch have not been measured recently, but they do not affect the result much.
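For reference, here is a minimal sketch of how a round-trip loss can be extracted from a reflection visibility measurement. It uses the standard high-finesse, overcoupled two-mirror cavity relation and is not necessarily the exact expression from the TAMA paper. The function names are hypothetical. The 2% is interpreted here, as an assumption, as the fraction of seed power that does not couple into the cavity mode. "Loss" lumps together everything except the FC1 transmission. The power ratio in the example is synthetic, generated from the quoted 44 ppm, not the measured value.

```python
import math

T_FC1 = 909e-6  # FC1 power transmissivity quoted in this entry


def cavity_reflectivity(ratio, coupled_fraction):
    """Remove the uncoupled light from the measured power ratio
    P_refl(on resonance) / P_refl(off resonance)."""
    return (ratio - (1.0 - coupled_fraction)) / coupled_fraction


def round_trip_loss(r_cav, t_input):
    """Round-trip loss of an overcoupled, high-finesse cavity whose
    on-resonance power reflectivity is r_cav."""
    root = math.sqrt(r_cav)
    return t_input * (1.0 - root) / (1.0 + root)


def expected_ratio(loss, t_input, coupled_fraction):
    """Forward model: power ratio expected for a given round-trip loss."""
    r_cav = ((t_input - loss) / (t_input + loss)) ** 2
    return coupled_fraction * r_cav + (1.0 - coupled_fraction)


# Synthetic ratio corresponding to 44 ppm loss and 2% uncoupled power
# (assumed interpretation of the misalignment / mode mismatch figure).
ratio = expected_ratio(44e-6, T_FC1, 0.98)

# Re-analyse the same ratio with different assumed coupling fractions.
for coupled in (1.00, 0.98, 0.95):
    loss = round_trip_loss(cavity_reflectivity(ratio, coupled), T_FC1)
    print(f"coupled fraction {coupled:.2f}: loss = {loss * 1e6:.1f} ppm")
```

With these assumed numbers, changing the coupled fraction between 95% and 100% moves the inferred loss by only a couple of ppm, which is small compared with the ±27 ppm error.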

The seed lock on the FC is not very stable, so a seed dither lock for the FC is necessary for a more precise measurement.

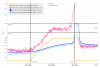

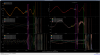

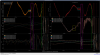

For the ringdown measurement, putting a large offset on the green VCO tune is not fast enough, so we requested the OPO guardian to DOWN instead. The second and third attachments show the ringdown measurement for TRANS and REFL. The measured finesse was 11000 for TRANS and 8000 for REFL, which is higher than the expected value of ~6700.
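As a cross-check of the numbers above, the sketch below converts between power decay time, finesse, and total round-trip loss using the usual high-finesse approximations. The function names are hypothetical. The 300 m cavity length is an assumed nominal value that is not stated in this entry. The ringdown is assumed to be a single exponential in power.

```python
import math

C = 299_792_458.0   # speed of light in m/s
FC_LENGTH = 300.0   # assumed nominal FC length in m (not from this entry)


def finesse_from_loss(t_input, loss):
    """High-finesse approximation: F = 2*pi / (total round-trip loss)."""
    return 2.0 * math.pi / (t_input + loss)


def total_loss_from_finesse(finesse):
    """Inverse of the relation above (input transmission plus loss)."""
    return 2.0 * math.pi / finesse


def finesse_from_decay_time(tau_power, length=FC_LENGTH):
    """Finesse from the 1/e decay time of the ringdown power."""
    fsr = C / (2.0 * length)
    return 2.0 * math.pi * fsr * tau_power


def decay_time_from_finesse(finesse, length=FC_LENGTH):
    """1/e power decay time expected for a given finesse."""
    fsr = C / (2.0 * length)
    return finesse / (2.0 * math.pi * fsr)


# Finesse implied by the visibility result (909 ppm + 44 ppm).
print(f"finesse from loss: {finesse_from_loss(909e-6, 44e-6):.0f}")

# Decay times and total losses corresponding to the quoted finesse values.
for finesse in (6700, 8000, 11000):
    tau_ms = decay_time_from_finesse(finesse) * 1e3
    loss_ppm = total_loss_from_finesse(finesse) * 1e6
    print(f"F = {finesse}: tau = {tau_ms:.2f} ms, total loss = {loss_ppm:.0f} ppm")
```

Under these approximations, 909 ppm of FC1 transmissivity plus 44 ppm of loss gives a finesse of roughly 6600, close to the expected ~6700. The ringdown values of 8000 and 11000 would correspond to total round-trip losses of about 785 ppm and 571 ppm, both below the assumed FC1 transmissivity alone, so the ringdown finesse probably should not be turned into a loss number without first understanding the discrepancy.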