Here are some plots that show the functioning of the fast shutter before, during, and after the June 7th pressure spikes. The fast shutter functions the same way before and after the pressure spikes. However, in the locking attempts made with a different HAM6 alignment, the beam going to AS_C was clipped, which meant that the fast shutter didn't block the beam on AS_A and AS_B as quickly as it normally does.

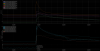

The first attachment shows a lockloss from June 6th, the lockloss before the pressure spikes started. The fast channel that records power on the shutter trigger diode (H1:ASC-AS_C_NSUM_OUT_DQ) is calibrated into Watts arriving in HAM6. Normally these NSUM channels are normalized by the input power scaling, but as the Simulink screenshot shows, that is not done in this case. Taking the time at which the light on AS_A (which is behind the shutter) is blocked as the trigger time, the shutter triggered when there was 0.733 Watts arriving in HAM6. There is a bounce, where the beam passes by the shutter again 51.5 ms after it first closes. This bounce lasts 15 ms, and in that time the power into HAM6 rises to 1.3 W. In total, AS_A (and AS_B) were saturated for 24 ms. This pattern is consistent across 4 locklosses from higher powers with the normal alignment on AS_C, both before and after the pressure spikes in HAM6.
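As a rough illustration of how a "total saturated time" figure like the one above can be read off a fast channel, here is a minimal Python sketch. It is not the procedure used for these plots. The function names, the sample rate, and the saturation threshold are all hypothetical and would need to be set from the actual AS_A data and its known saturation level.

```python
def saturated_intervals(samples, sample_rate_hz, threshold):
    """Return (start_time_s, duration_s) for each run of samples >= threshold.

    `threshold` is an assumed saturation level, not a value from this entry.
    """
    intervals = []
    run_start = None  # index where the current saturated run began
    for i, value in enumerate(samples):
        if value >= threshold and run_start is None:
            run_start = i
        elif value < threshold and run_start is not None:
            intervals.append((run_start / sample_rate_hz,
                              (i - run_start) / sample_rate_hz))
            run_start = None
    if run_start is not None:
        # The record ended while the diode was still saturated.
        intervals.append((run_start / sample_rate_hz,
                          (len(samples) - run_start) / sample_rate_hz))
    return intervals


def total_saturated_time(samples, sample_rate_hz, threshold):
    """Sum the durations of all saturated runs, in seconds."""
    return sum(d for _, d in saturated_intervals(samples, sample_rate_hz, threshold))
```

With a shutter bounce, `saturated_intervals` would return more than one run (the initial transient and the bounce), and `total_saturated_time` adds them up.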

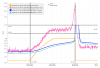

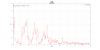

In the first lockloss with a pressure spike, 78308, the interferometer input power was 10W, rather than the usual 60W, and the alignment into HAM6 was in an unusual state. The shutter triggered when AS_C read 0.3 Watts. AS_C was clipped at the time, so this underestimates the power into HAM6. The trend of power on AS_A and AS_B was different this time, with what looks like two bounces and a total of 35 ms during which AS_A was saturated. The first bounce is about 14 ms after the shutter first triggers, but the beam isn't unblocked enough to saturate the diode. A second bounce saturates the diode 36 ms after the shutter first closed and lasts 26 ms, during which time the power into HAM6 rose to 0.55W. The power on AS_C peaked about 250 ms after the shutter triggered, at about 1W onto AS_C. Keita is going to look at energy deposited into HAM6 in typical locklosses, where AS_C is not clipped, as that will be more accurate. The pressure spike shows up on H0:VAC-LY_RT_PT152_MOD2_PRESS_TORR about 1.5 seconds after the power peaks on AS_C, and peaks at 1.1e-7 Torr.
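For reference, an energy estimate of this kind amounts to integrating the calibrated power over the lockloss transient. The sketch below is only an illustration under stated assumptions, not Keita's analysis. It assumes a uniformly sampled power trace in Watts (such as the calibrated AS_C channel when it is not clipped), and the function name and sample rate are hypothetical.

```python
def deposited_energy_joules(power_watts, sample_rate_hz):
    """Trapezoidal integral of a uniformly sampled power trace.

    power_watts: sequence of power samples in Watts (assumed calibrated).
    sample_rate_hz: sampling rate of the trace (assumed uniform).
    Returns the energy in Joules over the span of the trace.
    """
    dt = 1.0 / sample_rate_hz
    energy = 0.0
    # Average each pair of neighbouring samples and multiply by the time step.
    for p0, p1 in zip(power_watts, power_watts[1:]):
        energy += 0.5 * (p0 + p1) * dt
    return energy
```

If the trigger diode is clipped, as it was in this lockloss, the integral would underestimate the energy, which is why the unclipped locklosses are the better input.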

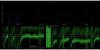

At the next pressure spike, the interferometer was locked with 60W input power. AS_A was saturated for 80 ms before the shutter triggered, and there was no bouncing. This time the pressure rise was recorded 2 seconds after the lockloss, and 1.8 seconds after the power on AS_C peaked. At 1.3e-7 Torr, this was a larger pressure spike than the first.

The third pressure spike was also a 60W lockloss, with AS_A saturated for 80 ms and no bounce from the AS shutter visible. The pressure rise was recorded 2.1 seconds after the lockloss, and was 3.1e-7 Torr.

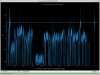

The final attachment shows the first lockloss after we reverted the alignment on AS_C, when the fast shutter behavior follows the same pattern seen before the pressure spikes happened.